SAPE: Spatially-Adaptive Progressive Encoding for Neural Optimization

NeurIPS 2021

1 Tel Aviv University, 2 ETH Zurich, Switzerland

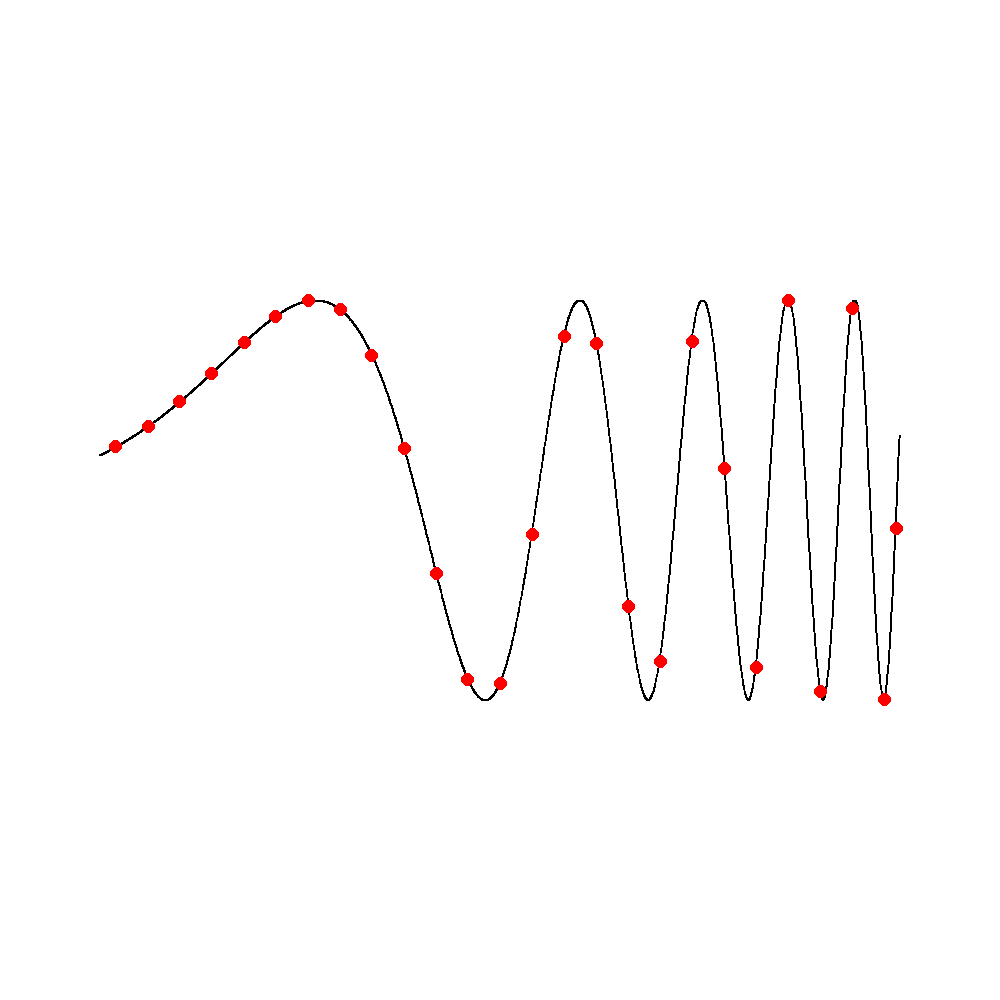

With SAPE, multilayer perceptrons can faithfully represent implicit 1D signals of varying frequency.

In the example below, the network attempts to learn a 1D function, shown as the black curve.

The training samples are shown in red.

SAPE is able to represent a wide range of natural images without tuning the positional encoding frequency scale.

By uniformly sampling 25% of the original pixels in the image as a train set, SAPE is still able to reconstruct small details of the original signal.
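The train/held-out split described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes "uniformly sampling" means uniform random sampling without replacement, and the helper names (`sample_training_pixels`, `normalize`) and the 64x64 image size are hypothetical.

```python
import random

def sample_training_pixels(height, width, fraction=0.25, seed=0):
    """Pick a uniform random subset of pixel coordinates as the train set."""
    rng = random.Random(seed)
    all_pixels = [(row, col) for row in range(height) for col in range(width)]
    num_train = int(round(fraction * len(all_pixels)))
    train = rng.sample(all_pixels, num_train)  # sampling without replacement
    train_lookup = set(train)
    held_out = [p for p in all_pixels if p not in train_lookup]
    return train, held_out

def normalize(pixel, height, width):
    """Map an integer (row, col) to [-1, 1]^2 (assumed input range for the MLP)."""
    row, col = pixel
    return (2.0 * row / (height - 1) - 1.0, 2.0 * col / (width - 1) - 1.0)

# Hypothetical 64x64 image: 25% of the pixels are used for training,
# the remaining 75% are only seen at evaluation time.
train_pixels, held_out_pixels = sample_training_pixels(64, 64)
```

The network is fit only on `train_pixels`; reconstruction quality is then judged on the full image, including the held-out pixels.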

Note that SAPE's performance is capped by the sampling rate (e.g., details finer than the spacing between samples are not guaranteed to be captured).

Below we show animations comparing the optimization progress of each algorithm. For SAPE, you may hover over the animation to toggle a heatmap that tracks the maximal unmasked frequency at each position (low to high).
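To make the heatmap quantity concrete, here is a rough sketch of a masked positional encoding and of the "maximal unmasked frequency" read off from it. Everything specific here is an assumption made for illustration: the number of bands, the `2^k * pi` frequency schedule, the linear soft mask, and the per-position `progress` value are hypothetical and are not taken from the SAPE implementation.

```python
import math

NUM_BANDS = 6  # hypothetical number of frequency bands

def positional_encoding(x, num_bands=NUM_BANDS):
    """Sin/cos features at frequencies 2^k * pi for one scalar coordinate."""
    feats = []
    for k in range(num_bands):
        freq = (2.0 ** k) * math.pi
        feats.extend([math.sin(freq * x), math.cos(freq * x)])
    return feats

def band_mask(progress, num_bands=NUM_BANDS):
    """Soft mask: band k opens linearly as progress goes from k to k + 1."""
    return [min(1.0, max(0.0, progress - k)) for k in range(num_bands)]

def masked_encoding(x, progress):
    """Scale each sin/cos pair by the mask value of its frequency band."""
    feats = positional_encoding(x)
    mask = band_mask(progress)
    return [f * mask[i // 2] for i, f in enumerate(feats)]

def max_unmasked_band(progress):
    """Index of the highest band with a nonzero mask, or -1 if all are masked."""
    open_bands = [k for k, m in enumerate(band_mask(progress)) if m > 0.0]
    return max(open_bands) if open_bands else -1

# Each position carries its own progress value, so the heatmap can differ
# across the signal: smooth regions may stay at low bands while detailed
# regions unlock higher ones.
progress_per_position = {0.1: 1.5, 0.7: 4.0}
heatmap = {x: max_unmasked_band(p) for x, p in progress_per_position.items()}
```

In this sketch the heatmap value is simply the index of the highest band that contributes to the encoding at a given position.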

Further below we compare the results after convergence.

SAPE is also useful for learning the representation of 3D occupancy implicit functions.

In the examples below, points were sampled uniformly in space and near the shape surface.

Points are then assigned a binary label to determine if they fall within the interior of the surface volume or not.
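The sampling and labeling scheme can be illustrated with a toy shape. In this sketch a sphere of radius 0.5 stands in for the real mesh, and the sample counts, the `[-1, 1]^3` bounding cube, and the Gaussian jitter `sigma` are all hypothetical choices rather than values from our experiments.

```python
import math
import random

RADIUS = 0.5  # hypothetical stand-in shape: a sphere centered at the origin

def is_inside(point):
    """Occupancy test for the stand-in sphere."""
    return math.sqrt(sum(c * c for c in point)) < RADIUS

def sample_uniform(rng, n, bound=1.0):
    """Points drawn uniformly from the cube [-bound, bound]^3."""
    return [tuple(rng.uniform(-bound, bound) for _ in range(3)) for _ in range(n)]

def sample_near_surface(rng, n, sigma=0.02):
    """Points on the sphere surface, perturbed by small Gaussian noise."""
    points = []
    for _ in range(n):
        # A normalized Gaussian vector gives a uniformly random direction.
        direction = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in direction))
        surface = [RADIUS * c / norm for c in direction]
        points.append(tuple(c + rng.gauss(0.0, sigma) for c in surface))
    return points

rng = random.Random(0)
points = sample_uniform(rng, 1000) + sample_near_surface(rng, 1000)
# Binary occupancy label: 1 if the point is inside the shape, 0 otherwise.
labels = [1 if is_inside(p) else 0 for p in points]
```

The near-surface samples concentrate supervision where the occupancy function changes, while the uniform samples cover the rest of the volume.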

Note that due to memory constraints, the result presented is a mesh converted from a neural implicit function using Marching Cubes with finite resolution.

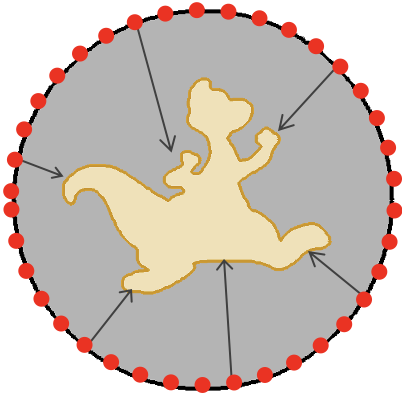

Finally, we demonstrate how SAPE can regularize a deformation process.

In the following task, for all shapes, SAPE is first pretrained to output the coordinates of a unit circle.

Then, the network is optimized to trace the boundaries of a target shape by learning the offset from the circle boundary to the shape contour, per position.

The progressive nature of SAPE allows it to capture the global shape first, during the early steps when spectral bias is present and the optimization is stable. As higher frequencies are revealed, SAPE is able to fit the finer details of the target shape.
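The circle-plus-offset parameterization can be sketched as below. This is only an illustration under stated assumptions: the star-like `target_contour` is a hypothetical target, the circle and target are matched by angle for simplicity, and `target_offset` computes the ideal offsets analytically, whereas in the actual task the offsets are produced by the network.

```python
import math

def unit_circle(t):
    """Base shape that the network is pretrained to output."""
    return (math.cos(t), math.sin(t))

def target_contour(t):
    """Hypothetical target: a five-lobed, star-like closed curve."""
    r = 1.0 + 0.3 * math.cos(5 * t)
    return (r * math.cos(t), r * math.sin(t))

def target_offset(t):
    """Ideal per-position offset from the circle to the target contour."""
    cx, cy = unit_circle(t)
    tx, ty = target_contour(t)
    return (tx - cx, ty - cy)

def zero_offset(t):
    """Offset at the start of the deformation: the curve is still the circle."""
    return (0.0, 0.0)

def deformed_point(t, offset_fn):
    """Deformed curve = circle point + offset predicted for that position."""
    cx, cy = unit_circle(t)
    dx, dy = offset_fn(t)
    return (cx + dx, cy + dy)

# 256 positions along the curve, parameterized by angle.
ts = [2.0 * math.pi * i / 256 for i in range(256)]
start_curve = [deformed_point(t, zero_offset) for t in ts]
final_curve = [deformed_point(t, target_offset) for t in ts]
```

Because the offset starts at zero, the optimization begins from a well-behaved closed curve; low-frequency offsets move it toward the overall target shape before high-frequency offsets add detail such as the lobes.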